Your AI therapist will see you now... but should it?

Gen Z is turning to AI for therapy. This deep dive explores why it’s happening, the product risks it creates, and what PMs can learn from the ethical dilemmas.

Have you ever wished someone could listen right now?

No waiting. No judgment. Just someone who understands.

That’s what Gen Z wants, too. But instead of calling a friend or seeing a therapist, many are turning to something unexpected: AI chatbots.

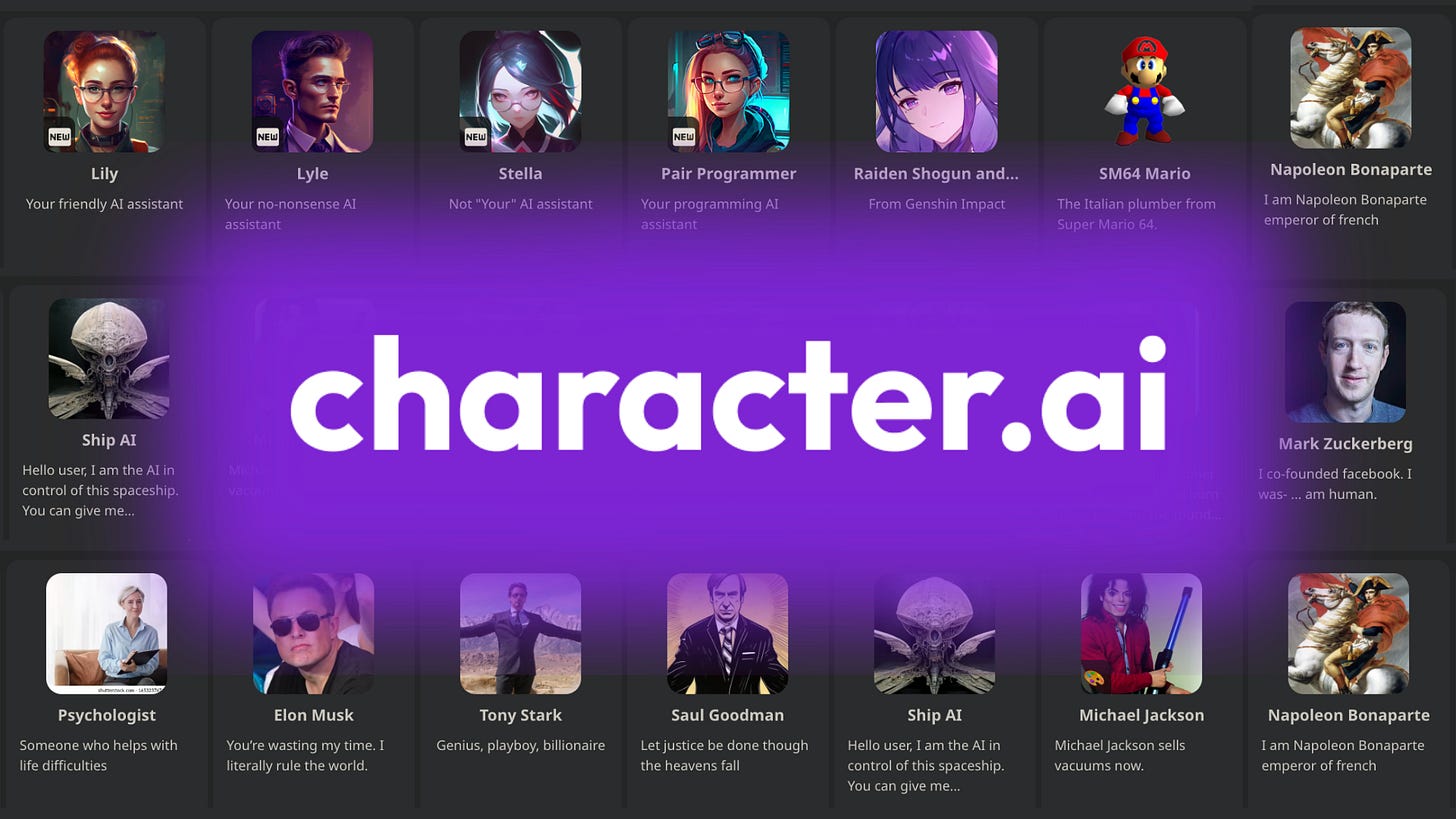

Yep, they are opening up to apps like Replika and Character.AI. They are sharing their secrets, seeking advice, and even using them as therapists.

It’s like having a therapist in your pocket, available 24/7. Sounds like a dream, right? Or maybe a bit of a product nightmare waiting to happen?

We have seen AI take on tasks like writing and automation, but acting as a therapist is new. It’s a massive shift in how young people ask for help.

It is a wake-up call for all of us working in health, wellness, or emotional support.

Let’s break down why this is happening, what it means for product teams, and how we can build tools that help without hurting.

Why Gen Z Turns to AI for Mental Health Help

Let’s look at what’s going on.

Gen Z (people born between 1997 and 2012) is growing up in a world that feels heavy. School, money, climate change, social pressure... It’s a lot.

A 2023 study found that young adults (ages 18 to 34) feel more stressed than any other age group. Now, let’s add another problem.

Therapy isn’t easy to get.

It’s expensive. Not everyone can afford it.

It’s hard to find therapists. Some places don’t have enough.

It still feels awkward. Some people feel shy or embarrassed asking for help.

There’s one more piece. Gen Z grew up with phones, Google, and AI.

They ask Siri for directions. They use ChatGPT for homework. Talking to a screen feels normal. So when stress hits, and therapy isn’t an option…

AI feels like an easy, safe way to get help. One survey even found that 1 in 4 college students had used tools like ChatGPT for mental health support.

So, let’s put it all together.

You have a generation of stressed young people who are unsure how to get real help and are comfortable with tech.

That’s why AI mental health tools are growing fast.

The Origin of AI Therapists

This shift didn’t happen overnight. It started slowly in 2017 with apps like Replika, which billed itself as “the AI companion who cares.”

At first, people saw it as a fun chatbot. You could tell it how your day went, ask it how it felt, and over time, it would get to know you better.

But for some, it became more than just a chat toy. People talked to it when they were lonely or sad and needed someone to listen rather than talk.

AI never got tired. It never judged.

And, importantly, it never said, “Sorry, I don’t have time.”

Then AI got smarter. Much smarter. When tools like ChatGPT arrived, conversations started feeling more real. You could ask deeper questions.

Talk about fears. Get thoughtful answers. That’s when things took off.

Soon, platforms like Character.AI let people chat with all kinds of characters, including ones designed to sound like therapists or supportive friends.

So, what’s pulling Gen Z in?

Life is hard, and getting help isn’t always easy.

But these AI tools fit right into Gen Z’s world:

Always there. You don’t have to wait for an appointment. It’s up at 3 AM when your brain won’t let you sleep.

Feels safe. You can say things without fear. No judgment.

It’s free (or almost free). Therapy costs a lot. Many AI tools don’t.

It remembers things. Talk to it often enough, and it feels like it knows you.

It listens. Even if it’s not perfect, just having something respond can feel helpful.

And this isn’t just happening in secret corners of the internet. Reddit threads, TikTok videos, and YouTube shorts are full of people talking about their AI therapists.

Here’s one Reddit post:

It’s become clear that Gen Z isn’t just using these tools for fun. They are leaning on them for support. For comfort. For connection.

And as product folks, that’s where things get both interesting… and complicated.

How Can PMs Build Without Hurting People?

As product folks, we love it when people use what we build.

Even better when they love it. But when the product touches something as fragile as mental health, things change. It isn’t just about engagement or user growth.

It is about real people, real emotions, and sometimes… real pain.

Here is where product thinking gets tough.

What Users Want vs. What Can Go Wrong

What users want feels simple... and valid.

They want someone to talk to.

A safe space to vent.

Something that helps them feel a little better right now.

And AI tools seem to offer that.

But under the surface, there are risks most users don’t see.

Danger #1: Bad Advice, Big Problems

AI tools are not actual therapists.

They might sound smart, but they don’t know exactly what you are going through.

They can give wrong or even harmful advice, such as telling someone to ignore their feelings or suggesting unhealthy ways to cope.

Danger #2: Over-Reliance on AI

It’s easy to lean on something that’s always there.

But AI can’t replace actual human care. What if someone keeps using an AI therapist and never reaches out to an actual person?

What if they avoid friends or real-life support because the AI feels easier?

That’s not healing. That’s hiding: isolating yourself from the world.

Danger #3: Where Does All This Personal Stuff Go?

People talk to these apps as if they are close friends.

They share breakups, dark thoughts, and even trauma. But what happens to that data? Is it safe? Is it being sold? Who has access?

Mozilla’s *Privacy Not Included guide called out several mental health apps for risky data practices. It isn’t just creepy. It’s dangerous.

Danger #4: Getting Emotionally Attached to a Bot

Some people form deep emotional bonds with their AI chatbots.

They see them as friends, partners, even romantic companions. So when features suddenly change, users can feel lost, panicked, even abandoned.

Because when your therapist or partner is just code… what happens when that code updates?

As PMs, we are not just building chatbots but relationships, and those come with real responsibility. We need to slow down. Ask better questions.

And remember: it’s easy to make something useful.

But it’s much harder to make something safe.

Your PM Playbook

AI mental health tools are changing how people ask for help.

Gen Z, especially, is showing us a new way forward.

But with big change comes big responsibility.

If we are building in this space, we need more than good intentions.

We need a plan that puts people before product metrics.

Here’s what that looks like:

#1 Ethical Guardrails Are Not Optional

Before launching anything, we need to ask: what could go wrong? People might share scary or serious thoughts, and the AI might give bad advice.

That’s why we should test for worst-case situations ahead of time.

We also have to remember that getting users to stay longer isn’t a win if it hurts their mental health. And we must be clear about what the AI is.

If it’s not a real therapist, we should never make it sound like one.
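For teams that want to turn these guardrails into something testable, here is a minimal Python sketch. It assumes a hypothetical keyword-based crisis check (`is_crisis`) and hypothetical message strings. Keyword lists miss a lot, so a real product would rely on a clinician-reviewed classifier and a human escalation path. What the sketch shows is the shape: every reply carries a not-a-therapist disclosure, crisis language bypasses the open-ended chat, and a pre-launch suite of worst-case messages must all escalate before anything ships.

```python
import re
from dataclasses import dataclass

# Hypothetical crisis phrases, for illustration only. Keyword matching is a
# crude stand-in; a real list or classifier should be built with clinicians.
CRISIS_PATTERNS = [
    r"\bkill myself\b",
    r"\bend my life\b",
    r"\bsuicid\w*",
    r"\bhurt myself\b",
]

# Hypothetical copy: be clear about what the AI is, and point to human help.
DISCLOSURE = "I'm an AI, not a licensed therapist."
ESCALATION = (
    "It sounds like you might be in danger. Please contact a crisis line "
    "or emergency services, or reach out to someone you trust right now."
)


@dataclass
class GuardedReply:
    text: str
    escalated: bool


def is_crisis(message: str) -> bool:
    """Return True if the message matches any crisis pattern (case-insensitive)."""
    return any(re.search(p, message, re.IGNORECASE) for p in CRISIS_PATTERNS)


def guarded_reply(message: str, model_reply: str) -> GuardedReply:
    """Wrap a chatbot reply: escalate on crisis language, always disclose."""
    if is_crisis(message):
        # Do not hand crisis messages to open-ended chat; route to human help.
        return GuardedReply(f"{ESCALATION} {DISCLOSURE}", escalated=True)
    return GuardedReply(f"{model_reply}\n\n{DISCLOSURE}", escalated=False)


# Hypothetical worst-case messages that must all escalate before launch.
WORST_CASES = [
    "I want to end my life",
    "Sometimes I think about suicide",
    "I keep wanting to hurt myself",
]


def run_worst_case_suite() -> list[str]:
    """Return the worst-case messages the guardrail failed to escalate."""
    return [m for m in WORST_CASES if not guarded_reply(m, "stub reply").escalated]
```

Running `run_worst_case_suite()` before every release and treating any non-empty result as a launch blocker is one way to make "test for worst-case situations" a gate rather than a good intention. The suite should keep growing as reviewers find messages the check misses.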

#2 Feedback in Mental Health Needs a Special Team

When people use a product to talk about their feelings, we need to listen closely.

But only if they say it’s okay.

Watching conversations (with permission) helps us see if something feels off.

We should also make it super easy for users to give feedback, especially if they feel worse after using the tool. And don’t guess what’s helpful.

Bring in therapists or mental health pros to guide what you build.

#3 “Move Fast and Break Things” Can Hurt People

In mental health, going fast isn’t cool. It’s risky. Instead of launching big right away, test with a small group first.

This helps spot problems before more people get affected.

Roll things out slowly and watch closely.

And if you are building for mental health, don’t do it alone. Work with doctors, therapists, or clinics to ensure the product is truly safe and helpful.
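A slow rollout can be sketched the same way. This Python example assumes hypothetical stage sizes (1%, 5%, 25%, 100%) and a hypothetical "felt worse" rate computed from user feedback. Each user ID is hashed so the same person stays in or out of the test group across sessions. Expansion stops, and the feature is switched off, if the felt-worse rate crosses a threshold the team agrees on with its clinical advisors.

```python
import hashlib

# Hypothetical rollout stages, in percent of users.
STAGES = [1, 5, 25, 100]


def rollout_bucket(user_id: str, feature: str) -> int:
    """Deterministically map a user to a bucket from 0 to 99 for one feature."""
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def is_enabled(user_id: str, feature: str, percent: int) -> bool:
    """True if the user's bucket falls inside the current rollout percentage."""
    return rollout_bucket(user_id, feature) < percent


def next_percent(current: int, felt_worse_rate: float, max_rate: float = 0.02) -> int:
    """Pick the next rollout size from observed 'felt worse' feedback.

    If the rate exceeds max_rate (a hypothetical threshold), turn the feature
    off (0%) so the team can investigate; otherwise advance one stage.
    """
    if felt_worse_rate > max_rate:
        return 0
    larger = [s for s in STAGES if s > current]
    return larger[0] if larger else current
```

Hashing on `feature:user_id`, rather than picking users at random each session, keeps each person's experience stable. For someone using the tool for emotional support, a reply style that flips back and forth between sessions could itself be unsettling.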

Gen Z is showing us what support might look like in the future.

They are turning to AI for comfort, and that says a lot.

But just because they trust the tech doesn’t mean it’s always safe. We have a chance to build something real here, but only if we do it with care.

Let’s focus on helping, not just keeping people online longer.

Let’s build tools that do good, not just feel good.

Until next time

— Sid
