Fundamentals Of AI Part 1 - AI, ML, DL, LLM And GenAI

AI product managers need to understand the fundamentals that power AI products. In this edition, we break down AI, ML, DL, LLMs, and GenAI.

When most people hear “artificial intelligence,” they picture a machine suddenly waking up, becoming conscious, and taking over the world.

The reality is far less dramatic, yet far more interesting.

Today’s AI isn’t about robot brains or sci-fi revolutions. It’s about software that learns, adapts, and helps us make better decisions.

For a product manager, understanding this difference is essential, because once you know what machine intelligence actually means, you start building better products.

This series of posts will give you that clarity. Starting right now.

Let’s dive in.

Artificial Intelligence

Before we talk about Machine Learning or ChatGPT, let’s start with the basics: what even is Artificial Intelligence?

At its core, Artificial Intelligence (AI) means making machines think and act like humans. Not perfectly, not consciously. Just smart enough to make decisions, learn from experience, or solve problems.

When Gmail finishes your sentence, when Netflix recommends a show, or when Google Maps reroutes you to avoid traffic, that’s AI in action.

Each of these systems is copying one small piece of how humans think: predicting, recognizing, or reasoning.

Before machine learning, computers could only follow fixed rules.

If engineers wanted a machine to recognize a cat, they had to write instructions like:

“If the image has two triangles on top and whisker lines in the middle, it’s probably a cat.”

That worked for simple cases.

But real life isn’t that straightforward. Cats look different, lighting changes, and angles confuse the rules.

As the world got messy, the rules broke.

So scientists asked a new question:

“What if, instead of giving the computer all the rules, we let it learn the rules by itself?”

That’s how Machine Learning (ML) was born: the idea that a computer can learn from data instead of being told what to do.

In other words:

AI is the goal → making machines smart.

ML is the method → teaching machines to learn from examples.

Machine Learning

Machine Learning, or ML, is a way for computers to learn by looking at examples, not by following rules.

Before ML

Computers only did what we told them to do.

For example, you could write a rule like:

“If an email says the word lottery, mark it as spam.”

That works for a while.

But spammers change words.

They write “lotttery” with extra letters.

Or they say “you won a prize” instead.

The rule breaks.
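Here is that hand-written rule as a minimal Python sketch. The function name `is_spam_by_rule` is hypothetical. The rule catches the exact word, but both variations spammers use slip right past it.

```python
def is_spam_by_rule(email: str) -> bool:
    """Hand-written rule: flag an email as spam if it contains the word 'lottery'."""
    return "lottery" in email.lower()


emails = [
    "Claim your LOTTERY winnings now!",   # caught: exact word, any letter case
    "Claim your lotttery winnings now!",  # missed: one extra letter
    "Congratulations, you won a prize!",  # missed: different wording
]

for email in emails:
    print(is_spam_by_rule(email), "|", email)
```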

How ML Is Different

With ML, we don’t make rules.

We show the computer lots of emails, some labeled “spam” and some labeled “not spam.” The computer looks at all of them and starts to notice patterns:

spam emails often sound urgent

some have weird links

many have strange spelling

some come at odd times

We never tell the computer these rules. It figures them out on its own.
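The sketch below shows what “figuring it out on its own” can look like. It uses one classic spam-filtering technique, a Naive Bayes classifier, written in plain Python. The six training emails are made up for illustration. Real filters use far more data and often other methods.

Notice that no rule mentions “urgent” or “prize.” The model simply learns which words appear more often in spam.

```python
import math
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into words, dropping basic punctuation."""
    return [w.strip("!.,?") for w in text.lower().split()]


def train(examples):
    """Count how often each word appears in spam vs. 'ham' (not-spam) emails."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(tokenize(text))
    return word_counts, label_counts


def predict(text, word_counts, label_counts):
    """Pick the label whose learned word frequencies best explain the text
    (multinomial Naive Bayes with add-one smoothing, scored in log space)."""
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    total = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label in ("spam", "ham"):
        score = math.log(label_counts[label] / total)
        n_words = sum(word_counts[label].values())
        for word in tokenize(text):
            count = word_counts[label][word] + 1  # add-one smoothing for unseen words
            score += math.log(count / (n_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Hypothetical labeled examples, made up for illustration.
training_data = [
    ("URGENT you won a prize click now", "spam"),
    ("claim your free prize urgent", "spam"),
    ("winner act now free money", "spam"),
    ("lunch meeting moved to noon", "ham"),
    ("here are the notes from our meeting", "ham"),
    ("can you review my draft today", "ham"),
]

word_counts, label_counts = train(training_data)
print(predict("urgent free prize inside", word_counts, label_counts))    # spam
print(predict("notes for the team meeting", word_counts, label_counts))  # ham
```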

A Simple Way to Think About It

Think about how you learned to recognize a dog.

No one gave you a list of rules like “a dog has four legs and a tail.” You just saw many dogs. Your brain noticed the pattern.

ML works the same way, except the computer uses numbers, not feelings.

It takes data → finds patterns → makes predictions.
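The data → patterns → predictions loop fits in a few lines. This sketch assumes a map app that predicts drive time from distance alone. The trip data is invented, and real systems use many more signals, such as time of day and live traffic. Here the “pattern” is just a straight line learned from past trips (ordinary least squares).

```python
def fit_line(xs, ys):
    """Find the straight line y = slope * x + intercept that best fits the data
    (ordinary least squares)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


# 1. Data: hypothetical past trips (distance in km, drive time in minutes).
distances = [2, 5, 8, 12, 15]
minutes = [8, 14, 20, 28, 34]

# 2. Patterns: learn how drive time grows with distance.
slope, intercept = fit_line(distances, minutes)

# 3. Predictions: estimate a trip the model has never seen.
print(f"10 km trip: about {slope * 10 + intercept:.0f} minutes")
```

With this made-up data, the learned pattern is 2 minutes per km plus 4 minutes. A 10 km trip therefore comes out at about 24 minutes.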

How ML Shows Up in Real Life

You see ML every day:

email spam filters

Netflix and YouTube recommendations

voice assistants like Siri and Alexa

maps that guess how long your drive will take

These systems get better when they see more examples.

More (good) data usually means better learning.

Why Good Data Matters

ML is smart only if the data is good.

Engineers have a saying:

“Garbage in, garbage out.”

If the data is messy, wrong, or unfair, the computer will learn the wrong thing.

Example: Imagine a delivery app trying to guess delivery time.

But most of its training data came from rainy days.

Rainy days have slow traffic.

So the model learns:

“Delivery is always slow.”

Now, even on sunny days, the app keeps saying:

“Your order will be late.”

Not because it’s true, but because the data taught it the wrong pattern.

The lesson is simple:

ML is only as smart as the data you give it.
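The delivery example can be reproduced with a deliberately naive model. In this sketch (all numbers hypothetical), nine of the ten training deliveries happened in the rain. As a result, the average the model learns is dominated by slow, rainy trips.

```python
from statistics import mean

# Hypothetical training data: (weather, delivery minutes).
# Nine of the ten past deliveries happened on rainy days.
training = [("rainy", 50)] * 9 + [("sunny", 25)]


def train_naive_model(rows):
    """A naive model that learns a single number: the average delivery time."""
    average = mean(minutes for _, minutes in rows)

    def predict(weather):
        # The weather is ignored: the model only learned "deliveries are slow".
        return average

    return predict


predict_minutes = train_naive_model(training)
print(predict_minutes("sunny"))  # 47.5 -> "Your order will be late."
```

The model predicts 47.5 minutes for a sunny-day order, even though the one sunny delivery it saw took 25. More balanced data, or a model that actually uses weather as an input, would correct that.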

Why We Needed Something More

ML helped computers learn basic patterns.

But some jobs are much harder:

understanding speech

recognizing faces

driving a car

translating languages

Those tasks need deeper learning, not just simple patterns.

That’s why we built Deep Learning.

Deep Learning

If Machine Learning taught computers to learn from examples, Deep Learning (DL) taught them to learn from raw data, much as humans learn from raw experience.

DL is a special type of ML loosely inspired by how our brains work. It uses something called a neural network: layers of tiny digital “neurons” that pass information to one another.

How It Works (Without the Math)

Imagine you’re teaching a computer to recognize a cat.

The first layer of neurons might notice simple patterns, like lines or edges.

The next layer combines those edges into shapes, maybe eyes or ears.

A few layers later, it can recognize an entire object: a cat, a car, or even your face.

No one tells it what to look for. It figures that out on its own as information flows through these layers.

That’s what makes deep learning so powerful: it can learn directly from raw data (photos, audio, text) without humans telling it what matters.
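A neural network layer is simpler than it sounds. Each “neuron” takes a weighted sum of its inputs and passes the result through an activation function.

This minimal Python sketch stacks two tiny layers. The weights are hypothetical placeholders. In a real network they are learned during training, and there can be millions or billions of them.

```python
import math


def sigmoid(z):
    """Squash any number into the range (0, 1)."""
    return 1 / (1 + math.exp(-z))


def layer(inputs, weights, biases):
    """One layer of 'neurons': each neuron takes a weighted sum of all inputs,
    adds a bias, and passes the result through an activation function."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


# Hypothetical weights. In a real network these are learned from data, not hand-written.
hidden_weights = [[0.5, -0.2, 0.1], [-0.3, 0.8, 0.4]]  # 3 inputs -> 2 neurons
hidden_biases = [0.0, 0.1]
output_weights = [[1.2, -0.7]]                        # 2 neurons -> 1 output
output_biases = [0.05]

pixels = [0.9, 0.1, 0.4]  # a tiny, made-up "image" of 3 pixel values
hidden = layer(pixels, hidden_weights, hidden_biases)  # layer 1: simple features
output = layer(hidden, output_weights, output_biases)  # layer 2: combines them
print(f"score: {output[0]:.3f}")
```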

Why It Changed Everything

Before deep learning, AI struggled with raw, messy data like photos, audio, and free-form text. Now, machines can recognize speech, translate languages, spot diseases in X-rays, and identify objects in real-world photos, all because they can find their own patterns.

Training these models takes massive amounts of data and computing power, often millions of examples and weeks of processing on powerful GPUs.

For years, only big research labs could afford that.

But as the internet exploded with data and hardware got cheaper, deep learning moved from research labs into everyday products, from voice assistants to photo apps.

It became the engine behind almost every modern AI system.

The Turning Point

And from this foundation came something even bigger: models that don’t just analyze information, but can create it.

That’s where Large Language Models (LLMs) and Generative AI come in.

LLMs: Machines That Speak

Deep learning gave computers the ability to see, hear, and identify patterns. But one skill remained out of reach for decades: understanding and generating language.

Human language is messy. The same idea can be said in a hundred ways. Words carry emotion, context, humor, and hidden meaning.

For a long time, machines could read words but not grasp them. They could store and search text, but they couldn’t produce it in a way that felt human.

Large Language Models (LLMs) now make that possible.

They are the technology behind tools like ChatGPT, Gemini, and Claude.

At first glance, these models feel like magic. You ask a question in plain English, and the system responds with a clear, thoughtful answer.

You can chat, debate, brainstorm, and even joke with it. It feels like the machine understands. But what’s really happening underneath is simpler and more fascinating.

How Language Models Learn

An LLM is a kind of deep learning model trained specifically on text.

During training, it reads huge amounts of writing: books, articles, Wikipedia pages, websites, code snippets, chat logs, and even social media posts.

Its goal is to learn how language flows.

It does this through a surprisingly basic task: predicting the next word (technically, the next token, which can be a whole word or a piece of one).

If you write, “The sky is…”, the model looks at all the text it has ever seen and predicts what usually comes next. “Blue” is likely. “Green” is rare.

By doing this billions of times, the model becomes extremely good at predicting what word (or phrase) fits next in almost any context.

This process is called training.
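Next-word prediction can be illustrated with a toy counting model. This sketch looks only at the last two words and counts what followed them in a tiny made-up corpus. Real LLMs use neural networks that consider far more context, but the goal is the same: pick a likely continuation.

```python
from collections import Counter, defaultdict

# A tiny, made-up training corpus. Real LLMs train on vastly more text.
corpus = """
the sky is blue . the sky is blue today . the sky is clear .
the sky is blue and the sea is blue . the grass is green .
""".split()

# Count which word follows each pair of words.
next_word_counts = defaultdict(Counter)
for w1, w2, w3 in zip(corpus, corpus[1:], corpus[2:]):
    next_word_counts[(w1, w2)][w3] += 1


def predict_next(text):
    """Predict the word that most often followed the last two words of `text`."""
    key = tuple(text.lower().split()[-2:])
    counts = next_word_counts.get(key)
    if not counts:
        return None  # this context never appeared in the training text
    return counts.most_common(1)[0][0]


print(predict_next("The sky is"))             # blue
print(predict_next("The grass is"))           # green
print(dict(next_word_counts[("sky", "is")]))  # {'blue': 3, 'clear': 1}
```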

When the model gets a word wrong, it learns from the mistake. It changes tiny parts inside itself, making some connections stronger and others weaker, just like your brain does when you practice a new skill.
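“Learning from the mistake” usually means gradient descent. The model measures the error, works out which direction to nudge each parameter, and nudges it a little. This sketch shrinks that down to a single hypothetical weight. An LLM applies the same basic idea to billions of weights.

```python
# A one-parameter "model": prediction = weight * x.
# Hypothetical goal: learn that the right answer is always 3 * x.
examples = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]

weight = 0.0          # start with a bad guess
learning_rate = 0.05  # how big each adjustment is

for epoch in range(50):
    for x, target in examples:
        prediction = weight * x
        error = prediction - target         # how wrong the guess was
        gradient = 2 * error * x            # slope of the squared error w.r.t. the weight
        weight -= learning_rate * gradient  # nudge the weight to shrink the error

print(f"learned weight: {weight:.3f}")  # close to 3.0
```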

After seeing billions of sentences, it develops an internal “feeling” for how words fit together, much like how you develop a feel for grammar after years of reading and writing. So when you ask an LLM,

“Write a short note apologizing for a delayed shipment,”

it doesn’t search the internet for an example. It generates one word by word, based on patterns it has learned:

“We’re sorry for the delay in delivering your order. Our team is resolving the issue, and your package will arrive soon. Thank you for your patience.”

It sounds intelligent because it has seen millions of examples of humans expressing regret, so it knows what an apology sounds like.
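Generating “word by word” is a loop: predict the next word, append it, and repeat. This sketch uses a tiny counting model trained on a few made-up apology sentences, and it always picks the likeliest next word (greedy decoding). Real LLMs work with tokens and a neural network, and they often sample rather than always taking the top choice.

```python
from collections import Counter, defaultdict

# Made-up training text; a real LLM learns from billions of sentences.
training_text = (
    "we are sorry for the delay . "
    "we are sorry for the inconvenience . "
    "thank you for your patience . "
    "we are working on the delay ."
).split()

# Learn which word tends to follow each word.
follows = defaultdict(Counter)
for current, nxt in zip(training_text, training_text[1:]):
    follows[current][nxt] += 1


def generate(prompt, max_words=10):
    """Generate text one word at a time: predict the next word, append it,
    and repeat until the model predicts the end of a sentence."""
    words = prompt.split()
    for _ in range(max_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break
        next_word = candidates.most_common(1)[0][0]  # greedy: pick the likeliest word
        if next_word == ".":
            break
        words.append(next_word)
    return " ".join(words)


print(generate("we"))  # we are sorry for the delay
```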

Why LLMs Feel So Smart

When a model masters language, it begins to simulate thought.

That’s what makes LLMs powerful.

They can reason through language alone.

They can summarize a document, write a plan, or explain a concept by finding the most probable sequence of words that fits the prompt.

But the model doesn’t know what’s true.

It doesn’t have memory, beliefs, or awareness. It generates what sounds plausible based on text-based patterns it has learned. That’s why it sometimes produces wrong but confident answers, a problem called hallucination.

If you ask for a list of AI PM books, it might invent a few that sound real but don’t exist. It isn’t lying; it’s guessing. The system simply doesn’t have a concept of truth.

As a product manager, when you work with an LLM, you are designing around probabilities, not guarantees. You are managing confidence instead of accuracy.

And you need to think about how to keep the user in control when the AI sounds certain but might be wrong.

That’s why many modern AI systems combine LLMs with retrieval tools: systems that fetch relevant information from trusted external sources before the LLM answers.

We will explore this approach, called Retrieval-Augmented Generation (RAG), in an upcoming post.
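The core idea of retrieval-augmented generation (RAG) is to fetch relevant facts first and hand them to the model along with the question. This minimal sketch assumes a tiny, hypothetical knowledge base and ranks documents by the words they share with the question.

Production systems usually use embedding-based vector search instead. The final prompt would then be sent to an LLM, which is not shown here.

```python
import re

# Hypothetical knowledge base of trusted documents (e.g., a help center).
documents = [
    "Refunds are processed within 5 business days.",
    "Orders ship within 24 hours on weekdays.",
    "Premium members get free express shipping.",
]


def words(text):
    """Lowercase the text and keep only alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, docs, top_k=1):
    """Rank documents by how many words they share with the question."""
    q = words(question)
    return sorted(docs, key=lambda d: len(q & words(d)), reverse=True)[:top_k]


def build_prompt(question, docs):
    """Put the retrieved facts in front of the question, so the LLM answers
    from them instead of from its training data alone."""
    context = "\n".join(f"- {d}" for d in retrieve(question, docs))
    return (
        "Answer using only the facts below. If they don't cover it, say you don't know.\n"
        f"Facts:\n{context}\n\nQuestion: {question}"
    )


print(build_prompt("How long do refunds take?", documents))
```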

The Scale of “Large”

The “large” in Large Language Models refers to two things: the size of the model (how many internal parameters it has) and the size of the dataset it’s trained on.

A small language model might have a few hundred million to a few billion parameters.

Frontier LLMs like GPT-4 are widely believed to have hundreds of billions of parameters or more, although OpenAI has not published GPT-4’s exact size. Each parameter helps capture subtle relationships between words and ideas. More parameters usually mean more capacity to model language in a deep and flexible way.

But there’s a trade-off. The bigger the model, the more expensive it is to train and run.
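A quick back-of-the-envelope calculation shows why size drives cost. Stored in a 16-bit format, each parameter takes 2 bytes, so the memory needed for the weights grows linearly with parameter count. This sketch ignores activations, context caches, and serving overhead. The model sizes are illustrative, not specific products.

```python
def weight_memory_gb(num_parameters, bytes_per_parameter=2):
    """Rough memory needed just to hold a model's weights, in gigabytes.

    2 bytes per parameter corresponds to 16-bit formats (fp16/bf16).
    Activations, context caches, and serving overhead are NOT included.
    """
    return num_parameters * bytes_per_parameter / 1e9


for name, params in [("500M model", 500e6), ("7B model", 7e9), ("400B model", 400e9)]:
    print(f"{name}: ~{weight_memory_gb(params):,.0f} GB at 16-bit")
```

That works out to roughly 1 GB, 14 GB, and 800 GB. The first fits comfortably on a laptop, while the last needs multiple high-end GPUs just to load.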

That’s why companies now experiment with Small Language Models (SLMs): faster, lighter models trained for specific use cases.

The idea isn’t always “bigger is better.” It’s “right-sized for the job.”

As an AI PM, that’s one of the first trade-offs you will learn to evaluate: the balance between model power, cost, and latency.
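“Right-sized for the job” can be turned into a simple rule. Of the models that meet your quality bar and latency budget, pick the cheapest.

The options and numbers below are hypothetical placeholders, not real benchmarks or prices. In practice, the quality score would come from your own evaluation set.

```python
from dataclasses import dataclass


@dataclass
class ModelOption:
    name: str
    quality_score: float         # e.g., accuracy on your own evaluation set (0-1)
    cost_per_1k_requests: float  # in your currency of choice
    p95_latency_ms: int          # 95th-percentile response time


# Hypothetical options; the numbers are placeholders, not real benchmarks or prices.
options = [
    ModelOption("large-llm", 0.92, 30.0, 2500),
    ModelOption("medium-llm", 0.88, 6.0, 900),
    ModelOption("small-slm", 0.81, 0.8, 200),
]


def pick_model(options, min_quality, max_latency_ms):
    """Return the cheapest option that clears the quality bar and latency budget,
    or None if nothing qualifies."""
    eligible = [
        o for o in options
        if o.quality_score >= min_quality and o.p95_latency_ms <= max_latency_ms
    ]
    return min(eligible, key=lambda o: o.cost_per_1k_requests, default=None)


print(pick_model(options, min_quality=0.85, max_latency_ms=1000).name)  # medium-llm
print(pick_model(options, min_quality=0.80, max_latency_ms=300).name)   # small-slm
```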

Generative AI: From Reading to Creating

Once computers learned how to understand language, the next step was teaching them how to make language.

This is where Generative AI comes in.

What Generative AI Means

Generative AI, or GenAI, is a type of AI that can create new things, like text, pictures, music, or even computer code.

Think of it like this:

A search engine shows you things that already exist.

A generative AI makes something new just for you.

If you say:

“Write me a business plan.”

A search engine just finds websites about business plans.

But a generative AI writes one from scratch, using what it has learned from reading millions of examples.

That’s why it’s called generative: it generates new content.

Why This Is a Big Deal

Before GenAI, software was like a vending machine:

You push a button.

It gives you the same thing every time.

With GenAI, software is more like a teammate:

You ask for an idea.

It gives you something new each time.

You say “make it shorter,” “try again,” or “more fun,” and it adapts to your style within the conversation.

It works with you.
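The “something new each time” behavior usually comes from sampling. The model assigns a score to each candidate next word, and the scores are turned into probabilities. One word is then drawn at random, with a temperature setting controlling how adventurous the draw is. The candidate words and scores in this sketch are hypothetical.

```python
import math
import random


def sample_next_word(scores, temperature=1.0, rng=random):
    """Turn raw scores into probabilities (softmax) and draw one word.

    Lower temperature -> nearly always the top word (vending-machine-like).
    Higher temperature -> more variety between draws.
    """
    words = list(scores)
    scaled = [scores[w] / temperature for w in words]
    top = max(scaled)
    weights = [math.exp(s - top) for s in scaled]  # subtract max for numerical stability
    return rng.choices(words, weights=weights, k=1)[0]


# Hypothetical scores a model might assign to the next word of "Here's a ___ idea".
scores = {"bold": 2.0, "simple": 1.8, "quirky": 1.2, "safe": 0.5}

rng = random.Random(7)  # fixed seed so the run is repeatable
print([sample_next_word(scores, temperature=1.0, rng=rng) for _ in range(5)])
print([sample_next_word(scores, temperature=0.05, rng=rng) for _ in range(5)])
```

With a fixed seed the output is repeatable; change or remove the seed to see different draws. At temperature 1.0, several different words tend to show up. Near zero, the top-scoring word wins almost every time.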

What People Can Do With It

GenAI helps different people in different ways:

A designer can make 50 logo ideas in one minute.

A writer can get an outline instantly.

A product manager can draft user stories or PRDs in seconds.

The AI doesn’t replace your creativity. It just removes the scary “blank page.”

You still decide what’s good and what’s not.

Why This Changes Product Management

In traditional software, product managers designed:

buttons

menus

screens

fixed steps

Users clicked through the options we created.

With GenAI, the user doesn’t click buttons. The user talks.

Their message becomes the new “button click.”

Examples:

“Explain this contract to me.”

“Help me plan a trip.”

“Write a polite email.”

This means PMs now design conversations, not screens.
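Under the hood, a conversation is typically a growing list of messages that is sent to the model on every turn. This sketch assumes the role/content message format that many chat-model APIs use. No real API is called, and the system instruction is a hypothetical example of what a PM might design.

```python
# Hypothetical conversation state using a role/content message format.
conversation = [
    {"role": "system", "content": "You are a friendly travel planner. Keep answers under 100 words."},
]


def add_user_message(history, text):
    """The user's message is the new 'button click': it becomes the next input."""
    return history + [{"role": "user", "content": text}]


def add_assistant_message(history, text):
    """Store the model's reply so the next turn can build on it."""
    return history + [{"role": "assistant", "content": text}]


conversation = add_user_message(conversation, "Help me plan a trip.")
conversation = add_assistant_message(conversation, "Sure! Where would you like to go, and for how long?")
conversation = add_user_message(conversation, "Lisbon, 3 days.")

for message in conversation:
    print(f"{message['role']:>9}: {message['content']}")
```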

How AI Learns From Users

When a user:

edits the AI’s answer

presses “try again”

or says “make it funnier / shorter / simpler”

They are giving the AI feedback.

Each small correction is like saying:

“Here’s what better looks like.”

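The product can use these signals to adjust future answers, both within the current conversation and, over time, by improving its prompts and models.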

Over time, the AI learns:

the tone users like

the style they prefer

the type of help they expect

Not because we programmed every detail, but because the users taught it through conversation.
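Before feedback can shape anything, the product has to capture it. This sketch turns hypothetical logged actions (retries, instructions, and edits) into preference counts. How a team then uses those signals varies by product and is not shown. Examples include tweaking prompts, building evaluation sets, or fine-tuning a model.

```python
from collections import Counter

# Hypothetical feedback events logged by the product.
events = [
    {"action": "retry"},
    {"action": "instruction", "text": "make it shorter"},
    {"action": "edit", "chars_before": 620, "chars_after": 310},
    {"action": "instruction", "text": "make it shorter"},
    {"action": "instruction", "text": "make it funnier"},
]


def summarize_feedback(events):
    """Turn raw user actions into preference signals the team can act on."""
    signals = Counter()
    for e in events:
        if e["action"] == "retry":
            signals["unsatisfied"] += 1
        elif e["action"] == "instruction":
            signals[e["text"].replace("make it ", "prefers ")] += 1
        elif e["action"] == "edit" and e["chars_after"] < e["chars_before"]:
            signals["prefers shorter"] += 1  # user trimmed the answer by hand
    return signals


print(summarize_feedback(events).most_common())
```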

How PMs Build With GenAI Now

Building AI products is different because:

1. You can’t plan every answer

In traditional apps, you know exactly what the software will do.

With GenAI, you can’t write all the possible answers. There are too many.

2. You guide instead of control

You don’t script every response.

You guide the AI using:

examples

limits

prompts

safety rules

feedback loops
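Guiding with examples, limits, and prompts often comes down to how the prompt is assembled. This minimal sketch puts a role, explicit rules, and one worked example in front of the user’s actual request, a pattern often called few-shot prompting. The function name and the rules are hypothetical.

```python
def build_guided_prompt(user_request, examples, limits):
    """Assemble a prompt that guides the model with rules and worked examples."""
    rules = "\n".join(f"- {rule}" for rule in limits)
    shots = "\n\n".join(f"Request: {req}\nResponse: {resp}" for req, resp in examples)
    return (
        "You write short, polite customer emails.\n"
        f"Rules:\n{rules}\n\n"
        f"Examples:\n{shots}\n\n"
        f"Request: {user_request}\nResponse:"
    )


limits = ["Stay under 80 words.", "Never promise a specific delivery date.", "No discounts unless asked."]
examples = [
    ("Apologize for a late order.",
     "We're sorry your order is running late. We're on it and will update you soon."),
]

print(build_guided_prompt("Thank a customer for their feedback.", examples, limits))
```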

3. Your job is shaping behavior

Instead of saying “do this,” you say:

“Here are the guardrails. Stay inside them.”

Over time, the system learns how to behave the way your users need.

(More on guardrails in upcoming posts.)
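Guardrails can also run after the model responds. This sketch is a simple rule-based output check with a hypothetical word limit and banned-phrase list. Real systems often add classifiers or a second model, but the idea is the same: if a response breaks a rule, block it, regenerate it, or fall back to a safe default.

```python
BANNED_PHRASES = ["guaranteed delivery", "100% refund"]  # hypothetical policy list
MAX_WORDS = 80                                           # hypothetical length limit


def check_guardrails(response):
    """Return a list of rule violations; an empty list means the response may be shown."""
    problems = []
    word_count = len(response.split())
    if word_count > MAX_WORDS:
        problems.append(f"too long ({word_count} words)")
    lowered = response.lower()
    for phrase in BANNED_PHRASES:
        if phrase in lowered:
            problems.append(f"contains banned phrase: {phrase!r}")
    return problems


draft = "Good news: guaranteed delivery by Friday!"
issues = check_guardrails(draft)
if issues:
    print("Blocked; regenerate or fall back:", issues)
else:
    print(draft)
```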

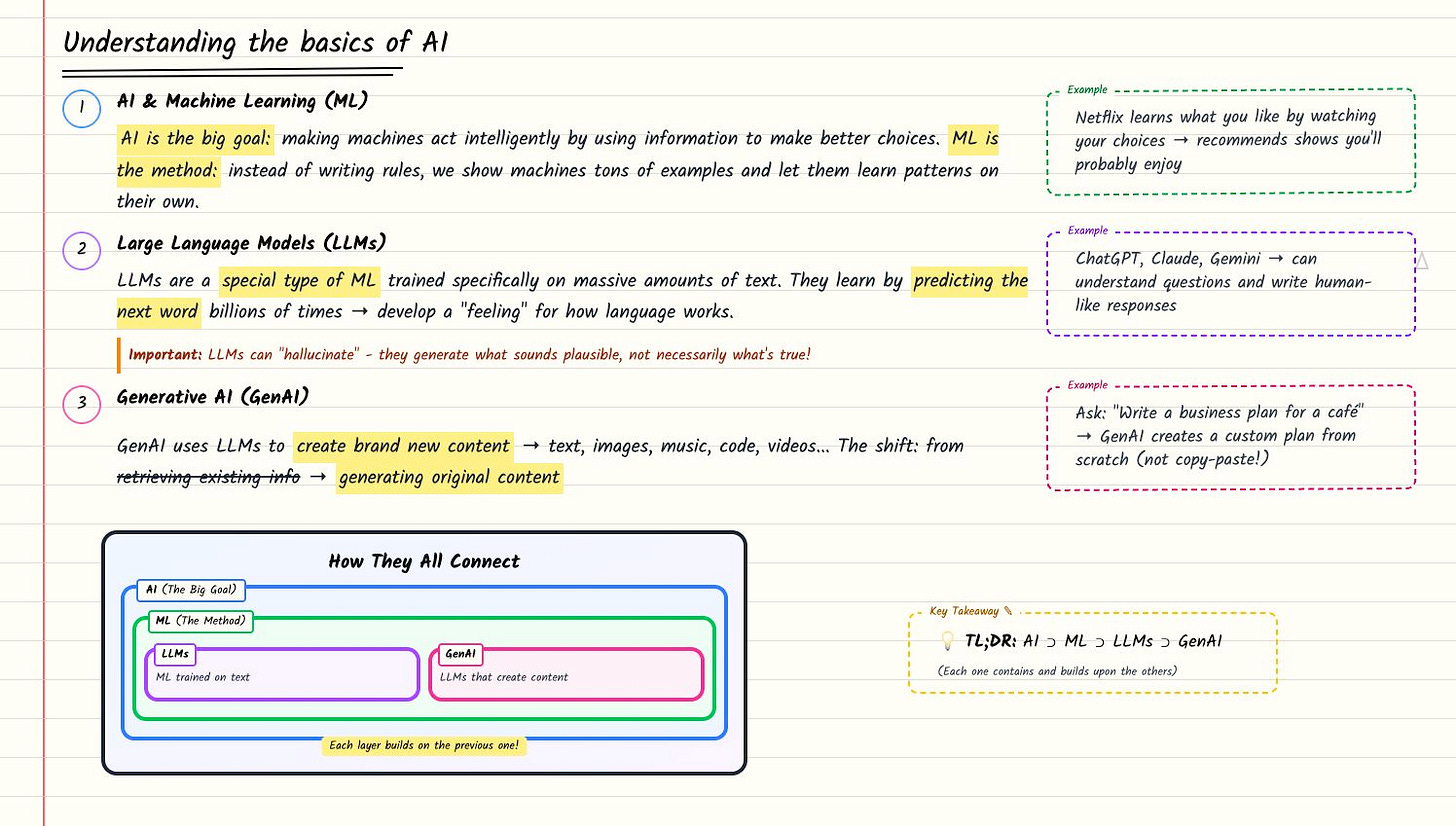

Bringing It All Together: AI, ML, DL, LLMs, And GenAI

At this point, it’s easy to get lost in the acronyms. Let’s connect the dots.

Artificial Intelligence (AI) is the goal: making machines act intelligently.

Machine Learning (ML) is the method: teaching them to learn from data.

Deep Learning (DL) is the technique: using neural networks with many layers to find complex patterns.

Large Language Models (LLMs) are deep learning systems trained on text, capable of generating fluent language.

Generative AI (GenAI) is what happens when these models start creating new things, such as text, code, or art, rather than just analyzing data.

Each step builds on the one before it.

AI is the dream, ML is the approach, DL is the technology, LLMs are one application, and GenAI is the outcome that feels the most human.

Understanding this stack helps you think clearly about what kind of system your product needs. Sometimes, a simple ML model will solve the problem faster and cheaper than an LLM.

Other times, only a generative model can create the kind of flexible, human experience users expect.

In a Nutshell

Large Language Models and Generative AI mark a turning point in how we build and use technology. For decades, software followed instructions.

Now, it collaborates. It learns, adapts, and creates alongside us.

Understanding the path that led here, from AI to ML to DL to LLMs to GenAI, gives you the mental map every modern product manager needs.

It helps you see what’s possible, what’s practical, and what’s just hype.

In the chapters ahead, we will go deeper into how these models “remember” information: how they store context, retrieve facts, and avoid hallucinations.

But before we move forward, pause here and notice something important:

The most advanced AI systems in the world are still reflections of our data and our design choices. They reflect what we teach them.

Our clarity, our biases, our intentions.

Intelligence is artificial. The responsibility is not.

That’s it for today.

Stay tuned for part 2 of this series. We will cover RAG and how it works.

Until then,

—Sid