Should You Let AI Write Your Jira Tickets?

AI promises to save hours on ticket writing. Your team is ready to test it. But what if it gets the requirements wrong? You decide.

Let’s be real!

Writing Jira tickets isn’t the fun part of the job.

You’ve got product specs, Slack notes, and maybe a Notion doc or two, and now it’s your job to turn all that into clean, developer-ready tickets.

It takes time. Sometimes, a lot of time.

Especially when you are trying to get the wording right or remember edge cases.

Now, AI tools like Atlassian Intelligence and Linear’s built-in AI can auto-generate user stories. They can fill in the ticket description, summary, and even acceptance criteria in seconds.

These tools promise to:

Save hours each week

Make your stories easier to read

Help devs move faster with better context

But early feedback is split. Some PMs love the speed.

Others say the summaries are too generic and sometimes miss key logic.

Now your engineering lead drops the question:

Should we turn this on for our next sprint?

The Problem

Manually writing tickets takes up hours every week, but it’s also where you build much of your context. This AI tool could help you move faster, but there’s a tradeoff:

You save time

You risk missing critical details

What if the AI skips an edge case?

Or writes acceptance criteria that sound fine but confuse your devs later?

You are stuck balancing speed vs. clarity. It’s tempting to automate, but one vague ticket could turn into a week of back-and-forth and missed deadlines.

Example:

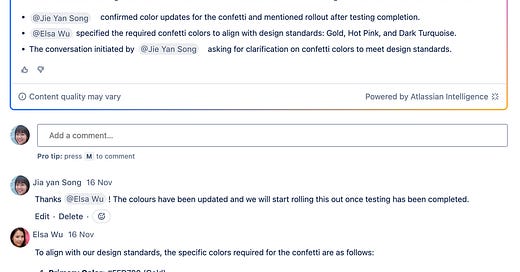

This is the AI summarized Jira ticket:

If I had written it, I would have included more detail:

As a Jira user, I want to see a confetti animation when I move tickets to "Done" so that I feel motivated and celebrate completing tasks.

Acceptance Criteria:

- Confetti animation triggers when status changes from "In Progress" to "Done"

- Animation lasts 2-3 seconds and doesn't block other actions

- Uses brand colors: Gold (#FFD700), Hot Pink (#FF69B4), Dark Turquoise (#00CED1)

- Animation works on both desktop and mobile views

- User can dismiss animation early by clicking anywhere

Definition of Done:

- [ ] Animation triggers correctly on status change

- [ ] Colors match specifications exactly

- [ ] No performance impact on page load

- [ ] Tested on Chrome, Firefox, Safari

See the difference? The AI version reads like meeting notes. The human version gives developers everything they need to actually build the feature.

Your Options

1. SHIP

Turn on AI summaries for all tickets, starting now

You go all in. AI handles the first draft of every Jira ticket. The team edits and reviews them before development starts, but most of the writing is automated.

Upside: Huge time savings. Your team gets cleaner summaries, faster.

Risk: If the AI misses details or uses vague language, your devs could waste time asking for clarification, or worse, build the wrong thing.

2. SKIP

Stick with your current process: humans write it all

You keep doing what you are doing. PMs write every story manually, with full control over what goes in and how it’s worded.

Upside: No surprises. You know exactly what’s being written and why.

Risk: You stay stuck in a time-heavy process. Your team may slow down if your backlog is large or specifications are long.

3. WAITLIST

Test it with one small feature first

You run a short pilot. Pick one small, low-risk feature.

Use the AI to generate tickets just for that. Compare the AI’s version with a human-written version. Ask the devs which one they prefer.

Upside: You get real feedback with minimal risk. You also learn how much editing AI tickets actually need.

Risk: You won’t see the full benefits right away. And it may take a sprint or two before you have enough feedback to decide.

You Decide

Think through:

What factors matter most for your decision?

Team experience level? Project complexity? Risk tolerance?

JAPM's Take

We would go with WAITLIST. AI summaries are super promising, but Jira tickets are too important to leave fully to automation.

One vague or confusing ticket can derail an entire sprint. You will end up answering 10 follow-up questions, clarifying edge cases, and rewriting half the story mid-week.

Instead, we would run a low-stakes test:

Pick one small feature (nothing high-risk)

Let AI draft the stories

Review and tweak manually before assigning

Track how much editing was needed and how the devs felt
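If you want to put a number on "how much editing was needed," one option (our own assumption, not a feature of Atlassian Intelligence or Linear) is to compare each AI draft with the final, human-edited version of the ticket. Below is a minimal sketch using Python’s standard-library `difflib`; the helper name `edit_ratio` and the sample ticket text are hypothetical.

```python
from difflib import SequenceMatcher


def edit_ratio(ai_draft: str, final_ticket: str) -> float:
    """Return how much of the text changed between the AI draft and the
    final ticket: 0.0 means untouched, 1.0 means fully rewritten.

    Hypothetical pilot helper: it compares word sequences, not meaning.
    """
    matcher = SequenceMatcher(None, ai_draft.split(), final_ticket.split())
    # ratio() is 2*M/T, where M = matching words and T = total words in both texts.
    return 1.0 - matcher.ratio()


if __name__ == "__main__":
    # Hypothetical example: the PM tightened the trigger condition.
    draft = "Add confetti animation when ticket is moved to Done"
    final = ("Add confetti animation when ticket status changes from "
             "In Progress to Done")
    print(f"Edited: {edit_ratio(draft, final):.0%}")
```

Logged per ticket across the pilot, this gives a rough trend line. A low score does not prove a ticket is good (a short, vague draft can survive untouched), so pair it with how the devs felt about the tickets.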

Think of it like hiring a junior PM to write stories: you still need to review their work. The goal isn’t to save every minute, but to make planning easier without sacrificing quality.

AI can speed up writing Jira tickets, but don’t trust it blindly.

Test it carefully, get your team’s feedback, and keep human review in the loop.

What did you choose? The best PMs know that new tools are only as good as how thoughtfully you implement them. Answer the poll and comment below.

I often iterate with AI and then adjust the details to match our company/team language.

I agree on the importance, and that you shouldn’t let AI handle it by itself. I wonder if you could improve quality drastically by providing five extraordinary tickets from the past as examples (not sure if that’s technically possible in those tools, but it definitely is with ChatGPT).

When tickets are written by AI and the code is implemented by AI, maybe we need to rethink this process anyway :D